
Settings for Data Loader Bulk API to upload huge amounts of records

I am trying to migrate data from Jira to my Salesforce org. I have to import a large number of tickets (~6,000 records), comments (~70,000 records) and attachments (~50,000 records). After some research, I found that I can use Data Loader with the Bulk API enabled.

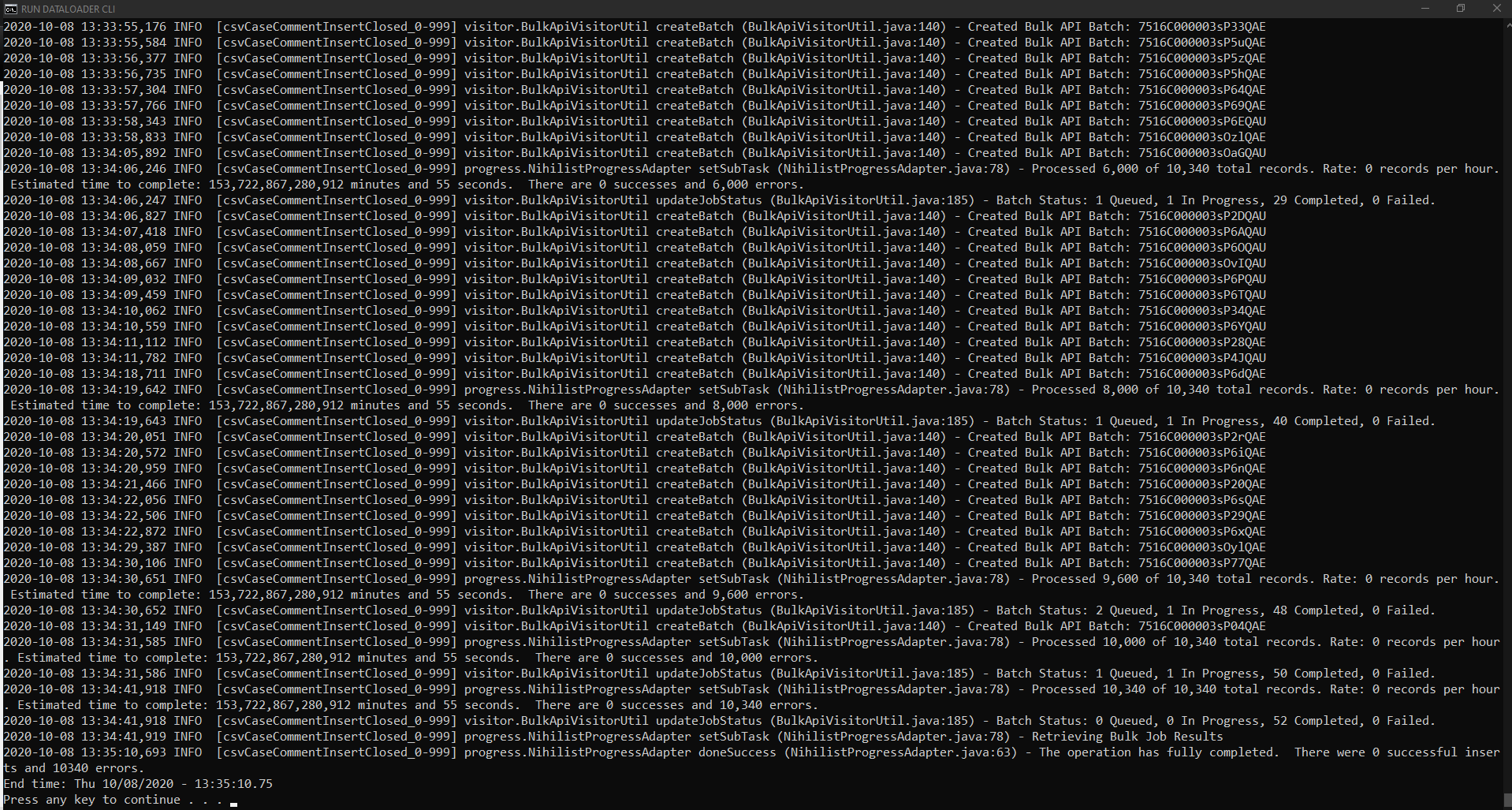

I split my data and process 1,000 tickets at a time (1,000 Cases, plus the CaseComments and ContentVersions for those 1,000 tickets). I successfully upserted 1,000 Cases, but when I tried to insert the 10,340 comments for those Cases, all of them failed.
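The per-ticket split described above can be scripted. A minimal sketch, assuming each exported comment or attachment row carries the Jira key of its parent ticket in a column called `ParentKey` (a hypothetical name, not from the original export), groups tickets into chunks of 1,000 and pulls along each chunk's child rows:

```python
from collections import defaultdict

def chunk_by_parent(ticket_keys, child_rows, parent_field="ParentKey", size=1000):
    """Group tickets into chunks of `size` and attach each chunk's child rows.

    `parent_field` is a hypothetical column holding the Jira key of the
    ticket that a comment or attachment row belongs to.
    """
    # Index child rows by parent ticket in a single pass.
    children_by_parent = defaultdict(list)
    for row in child_rows:
        children_by_parent[row[parent_field]].append(row)

    chunks = []
    for start in range(0, len(ticket_keys), size):
        tickets = ticket_keys[start:start + size]
        # Collect the children of this chunk's tickets, in ticket order.
        children = [row for key in tickets for row in children_by_parent.get(key, [])]
        chunks.append((tickets, children))
    return chunks


# Usage with made-up data: 2,500 tickets with 3 comments each.
tickets = [f"JIRA-{i}" for i in range(2500)]
comments = [{"ParentKey": t, "Body": "..."} for t in tickets for _ in range(3)]
for tickets_in_chunk, comments_in_chunk in chunk_by_parent(tickets, comments):
    print(len(tickets_in_chunk), len(comments_in_chunk))
# 1000 3000
# 1000 3000
# 500 1500
```

Child rows whose parent key is not in the ticket list are silently left out, which is worth checking before a load. Each chunk can then be written to its own CSV (for example with `csv.DictWriter`) and loaded Cases first, then their comments and attachments.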

I checked the error log and saw many errors like this:


CANNOT_INSERT_UPDATE_ACTIVATE_ENTITY:CaseCommentTrigger: execution of AfterInsert caused by: ConnectApi.RateLimitException: You have reached the Connect API's hourly request limit for this user and application. Please try again later. Class.ConnectApi.ChatterFeeds.postFeedElement: line 1458, column 1 Class.CaseCommentHandler: line 223, column 1 Trigger.CaseCommentTrigger: line 21, column 1

These are the settings I used:

```xml
<bean id="CaseCommentInsertClosed_0-999" class="com.salesforce.dataloader.process.ProcessRunner" singleton="false">
    <description>description</description>
    <property name="name" value="csvCaseCommentInsertClosed_0-999"/>
    <property name="configOverrideMap">
        <map>
            <!-- Connection, timeouts and debug logging -->
            <entry key="sfdc.timeoutSecs" value="600"/>
            <entry key="sfdc.bulkApiCheckStatusInterval" value="10000"/>
            <entry key="sfdc.debugMessages" value="true"/>
            <entry key="sfdc.debugMessagesFile" value="C:\Jira_SF_Data\DataLoader_Log\CaseCommentInsertClosed_0-999SoapTrace.log"/>
            <entry key="sfdc.endpoint" value="myenpoint"/>
            <entry key="sfdc.username" value="myuser"/>
            <entry key="sfdc.password" value="mypassword"/>
            <!-- Target object, operation and field mapping -->
            <entry key="sfdc.entity" value="CaseComment"/>
            <entry key="process.operation" value="insert"/>
            <entry key="process.mappingFile" value="C:\Users\OPSWAT\OneDrive - OPSWAT\Jira2SF_Migration\Jira2SF\Mappings\CaseComment.sdl"/>
            <!-- Input CSV and success/error output files -->
            <entry key="dataAccess.name" value="C:\Jira_SF_Data\CSV_Data\CaseComment_Closed_0-999.csv"/>
            <entry key="process.outputSuccess" value="C:\Jira_SF_Data\DataLoader_Log\CaseCommentInsertClosed_0-999_success.csv"/>
            <entry key="process.outputError" value="C:\Jira_SF_Data\DataLoader_Log\CaseCommentInsertClosed_0-999_error.csv"/>
            <entry key="dataAccess.type" value="csvRead"/>
            <entry key="dataAccess.readUTF8" value="true"/>
            <!-- Not used by an insert; left empty -->
            <entry key="sfdc.externalIdField" value=""/>
            <entry key="sfdc.extractionSOQL" value=""/>
            <!-- Load behaviour: Bulk API mode, field truncation and batch sizes -->
            <entry key="sfdc.useBulkApi" value="true"/>
            <entry key="sfdc.bulkApiSerialMode" value="true"/>
            <entry key="sfdc.truncateFields" value="true"/>
            <entry key="sfdc.writeBatchSize" value="1000"/>
            <entry key="sfdc.readBatchSize" value="100"/>
            <entry key="sfdc.loadBatchSize" value="200"/>
        </map>
    </property>
</bean>
```
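Because the batch-size question later in this thread hinges on which overrides the bean really carries, it can help to read them back programmatically. A minimal sketch using Python's standard `xml.etree.ElementTree`, assuming a single `<bean>` element as input (the string below is a trimmed copy of the bean above; a full process-conf.xml usually wraps several beans, so you would first select the one you need):

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the bean above (only the load-behaviour entries kept).
BEAN_XML = """
<bean id="CaseCommentInsertClosed_0-999" class="com.salesforce.dataloader.process.ProcessRunner" singleton="false">
  <property name="configOverrideMap">
    <map>
      <entry key="sfdc.useBulkApi" value="true"/>
      <entry key="sfdc.bulkApiSerialMode" value="true"/>
      <entry key="sfdc.loadBatchSize" value="200"/>
    </map>
  </property>
</bean>
"""

def override_settings(bean_xml):
    """Return the configOverrideMap entries of one Data Loader bean as a dict."""
    root = ET.fromstring(bean_xml.strip())
    settings = {}
    # Only look inside <property name="configOverrideMap">.
    for prop in root.iter("property"):
        if prop.get("name") == "configOverrideMap":
            for entry in prop.iter("entry"):
                settings[entry.get("key")] = entry.get("value")
    return settings


settings = override_settings(BEAN_XML)
for key in ("sfdc.useBulkApi", "sfdc.bulkApiSerialMode", "sfdc.loadBatchSize"):
    print(key, "=", settings.get(key))
```

Run against the full bean, this makes it easy to spot that `sfdc.loadBatchSize` is explicitly set to 200 rather than falling back to a default.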

I found this document about Bulk API limits (https://developer.salesforce.com/docs/atlas.en-us.salesforce_app_limits_cheatsheet.meta/salesforce_app_limits_cheatsheet/salesforce_app_limits_platform_bulkapi.htm). I am using Data Loader with Bulk API v1, but I don't think I exceed the limit with these settings, or maybe I am misunderstanding something. Please advise how to avoid exceeding the rate limit.

Thanks in advance.

Apex Code Development


Good to know it's working now.

Try to bulkify your trigger; that should work as a long-term fix.

Examples:

https://www.biswajeetsamal.com/blog/tag/bulkify-trigger/

https://force-base.com/2016/02/03/how-to-bulkify-trigger-in-salesforce-step-by-step-guide/
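To make "bulkify" concrete for this error: the stack trace shows `CaseCommentHandler` calling `ConnectApi.ChatterFeeds.postFeedElement`, apparently once per inserted comment, so every CaseComment costs one Connect API request against the hourly per-user limit. A bulkified handler instead collects the feed-item inputs for all records in one trigger invocation and posts them together (in Apex, `ConnectApi.ChatterFeeds.postFeedElementBatch` is the batch variant). The Python sketch below only models how many calls each approach makes; the 200-record trigger chunk reflects how Salesforce hands records to triggers, and the 500-items-per-call cap is an assumption to verify against the Connect in Apex reference.

```python
import math

TRIGGER_CHUNK = 200     # records handed to one trigger invocation
BATCH_POST_LIMIT = 500  # assumed max feed elements per batch post call

def trigger_chunks(total_records, chunk=TRIGGER_CHUNK):
    """Sizes of the record chunks a trigger sees (simplified: one continuous stream)."""
    full, rest = divmod(total_records, chunk)
    return [chunk] * full + ([rest] if rest else [])

def calls_per_record(chunks):
    # Non-bulkified handler: one post call for every record.
    return sum(chunks)

def calls_bulkified(chunks, batch_limit=BATCH_POST_LIMIT):
    # Bulkified handler: gather a chunk's inputs, post them in as few calls as possible.
    return sum(math.ceil(size / batch_limit) for size in chunks)


chunks = trigger_chunks(10340)
print(calls_per_record(chunks))  # 10340
print(calls_bulkified(chunks))   # 52
```

The point of the comparison is that the bulkified version scales with the number of trigger invocations rather than the number of comments, which is what keeps a 10,340-comment load well away from an hourly request limit.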

Please mark this as Best Answer if the above information was helpful, so that it can help others in the future.

Thanks,

All Answers

The Composite Batch resource is not designed for doing a large number of SObject actions.

Check the link below, which has details on the above error message.

https://help.salesforce.com/articleView?id=000316318&language=en_US&type=1&mode=1

A few references:

https://developer.salesforce.com/blogs/tech-pubs/2017/01/simplify-your-api-code-with-new-composite-resources.html

https://developer.salesforce.com/docs/atlas.en-us.api_rest.meta/api_rest/resources_composite_batch.htm

Thanks,

Vinay Kumar

I just found that the concurrent API request limit for production orgs and sandboxes is only 25 long-running requests (those lasting 20 seconds or longer); see API Request Limits and Allocations (https://developer.salesforce.com/docs/atlas.en-us.salesforce_app_limits_cheatsheet.meta/salesforce_app_limits_cheatsheet/salesforce_app_limits_platform_api.htm). My load failed because Data Loader somehow still uses the default batch size of 200, which results in 52 batches/concurrent API calls.

According to Calculate API calls consumed by Data Loader (https://help.salesforce.com/articleView?id=000333682&type=1&mode=1), if you select "Enable Bulk API", the default batch size is 2,000. I removed the following settings from my config files, or set all of them to 1000, but it still failed. Please advise how to set the batch size.
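The batch counts above come from simple ceiling division. A minimal sketch, assuming Data Loader splits the CSV into Bulk API batches of `sfdc.loadBatchSize` records (note that the configuration posted in the question sets `sfdc.loadBatchSize` to 200, which gives exactly the 52 batches mentioned):

```python
def bulk_batches(record_count, batch_size):
    """Number of batches needed to send `record_count` records, `batch_size` per batch."""
    # Integer ceiling division: a partial last batch still counts as a batch.
    return (record_count + batch_size - 1) // batch_size


for size in (200, 1000, 2000):
    print(size, bulk_batches(10340, size))
# 200 52
# 1000 11
# 2000 6
```

Under that assumption, raising `sfdc.loadBatchSize` is what reduces the batch count; Bulk API v1 caps a single batch at 10,000 records.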

I think I am already using the Bulk API, as recommended in https://help.salesforce.com/articleView?id=000316318&language=en_US&type=1&mode=1, by enabling sfdc.useBulkApi = true in Data Loader.


Hi Vinay,

I have another issue when importing a huge number of attachments. Can you take a look at https://developer.salesforce.com/forums#!/feedtype=SINGLE_QUESTION_DETAIL&dc=APIs_and_Integration&criteria=OPENQUESTIONS&id=9062I000000IRTTQA4 and advise?

Thanks,

Tien

Sorry, this is the correct post that I meant in my previous comment: https://developer.salesforce.com/forums/#!/feedtype=SINGLE_QUESTION_DETAIL&dc=APIs_and_Integration&criteria=OPENQUESTIONS&id=9062I000000IRXkQAO

You seem experienced with Salesforce. Maybe you can provide good advice.

Thanks,

Tien